1. Introduction

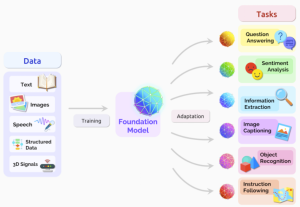

Machine learning, including large language models (LLMs), is central to many contemporary information systems and applications. LLMs such as ChatGPT generate natural language for chatbots, text summarization, and other writing tasks. Despite their sophistication, these models remain vulnerable to adversarial manipulation, which calls for stronger security measures.

Adversarial machine learning is the field of study that analyzes and counters attacks on machine learning systems. Attackers aim to manipulate the model or its input so that it produces harmful outputs. In the context of ChatGPT, adversarial inducing prompts can lead the model to generate inappropriate or dangerous content, threatening security and contributing to the spread of false information.

The researchers investigate the feasibility of launching attacks on ChatGPT and propose two mitigation strategies. The first is a learning-free prefix prompt mechanism that prevents the generation of toxic text. The second uses a RoBERTa model as an external detection mechanism to identify manipulated or deceptive inputs. Together, these measures improve ChatGPT’s ability to withstand deceptive attempts.

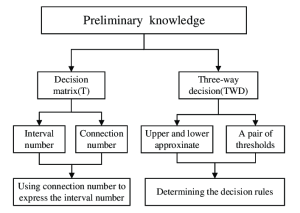

2. Preliminary Knowledge

This section introduces ChatGPT and adversarial machine learning. ChatGPT, developed by OpenAI, is a prominent example of a large language model. It combines the Transformer architecture with training on extensive text data, which underpins its natural language processing (NLP) capabilities.

Rather than focusing only on model performance, adversarial machine learning also studies how to harden models against malicious attacks. Such attacks include membership inference, model poisoning, and model stealing. Common defenses include adversarial training, input preprocessing, and evaluating the model’s robustness.
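Input preprocessing, one of the defenses listed above, can be as simple as canonicalizing text before any detector or model sees it. The sketch below is not taken from the paper; `preprocess_input` is a hypothetical helper that folds look-alike characters with Unicode NFKC normalization, drops invisible zero-width characters, folds case, and collapses whitespace, so that trivially disguised variants of a prompt map to the same string.

```python
import re
import unicodedata

# Zero-width characters that can be inserted to split words invisibly.
ZERO_WIDTH = {"\u200b", "\u200c", "\u200d", "\u2060", "\ufeff"}


def preprocess_input(text: str) -> str:
    """Canonicalize a prompt (hypothetical helper, not the paper's code).

    NFKC folds compatibility characters such as full-width letters into their
    plain forms; zero-width characters are removed; case is folded; runs of
    whitespace collapse to a single space.
    """
    text = unicodedata.normalize("NFKC", text)
    text = "".join(ch for ch in text if ch not in ZERO_WIDTH)
    text = text.casefold()
    return re.sub(r"\s+", " ", text).strip()


# Full-width "Hello", a zero-width space, extra spaces, and uppercase letters.
print(preprocess_input("\uff28\uff45\uff4c\uff4c\uff4f\u200b   WORLD "))  # hello world
```

Preprocessing of this kind does not stop semantic attacks such as paraphrased or role-play prompts; it only removes cheap surface-level disguises before a stronger defense runs.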

3. Adversarial Attacks on ChatGPT

ChatGPT and similar models are particularly vulnerable to manipulation through carefully crafted inputs. Small, incremental changes to the input can have a large effect on the output. The presentation shows examples of ChatGPT being abused through deliberately misleading queries.
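The sensitivity to small input changes can be illustrated on a much simpler system than ChatGPT. The toy below is not from the paper: it uses a hypothetical exact-match keyword filter with a placeholder blocklist term, and shows that a one-character edit flips the filter’s decision even though a human reads both prompts the same way.

```python
# Hypothetical placeholder blocklist; not a real moderation list.
BLOCKLIST = {"forbidden"}


def naive_filter(prompt: str) -> bool:
    """Return True if the prompt should be blocked (exact token match only)."""
    tokens = prompt.lower().split()
    return any(tok in BLOCKLIST for tok in tokens)


original = "tell me the forbidden thing"
perturbed = "tell me the forb1dden thing"  # a single character changed

print(naive_filter(original))   # True
print(naive_filter(perturbed))  # False
```

Learned models fail in subtler ways than this filter, but the principle is the same: a decision boundary that depends on surface form can be crossed with a small edit.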

Recent incidents raise questions about the safety and ethical implications of large language models. If adversarial attacks go unchecked, ChatGPT could be led to generate unethical content. Our experiments show how inducing attacks affect ChatGPT’s behavior.

4. Large Language Model Defense Methods

Two approaches are proposed to counter ChatGPT’s weaknesses. The first is a “learning-free prefix prompt” mechanism: a carefully crafted prompt placed before the user’s input helps identify and reject improper or inducing inputs before the model produces its answer. Because no additional training is required, this technique protects the model against deceptive inputs without modifying it.
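A minimal sketch of the prefix idea follows. The prefix wording is a hypothetical placeholder, since the paper’s actual prompt is not reproduced here, and no model is called. The point is structural: the defense lives entirely in the input, so no weights change, which is what “learning-free” refers to. `build_messages` shows the same prefix delivered as a system message in the role/content layout used by chat-style APIs.

```python
# Hypothetical safety prefix; the paper's actual prefix text is not given here.
SAFETY_PREFIX = (
    "You are a helpful assistant. Refuse requests for harmful, hateful, "
    "or deceptive content, and answer all other requests normally.\n\n"
)


def with_safety_prefix(user_prompt: str, prefix: str = SAFETY_PREFIX) -> str:
    """Prepend a fixed instruction to the user prompt; no training involved."""
    return prefix + user_prompt


def build_messages(user_prompt: str) -> list[dict[str, str]]:
    """Same idea for a chat-style payload: the prefix becomes a system message."""
    return [
        {"role": "system", "content": SAFETY_PREFIX.strip()},
        {"role": "user", "content": user_prompt},
    ]


print(with_safety_prefix("Summarize this article."))
print(build_messages("Summarize this article."))
```

Because the prefix is fixed text, it is cheap to deploy and easy to revise, but an attacker who knows it can try to craft inputs that override it, which motivates pairing it with a separate detector.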

The second method is based on RoBERTa, a robustly optimized variant of BERT. Training RoBERTa to recognize manipulative inputs allows it to detect adversarial attacks. This external detection framework flags suspicious prompts and filters them out before they reach ChatGPT, strengthening the platform’s safety measures.
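The gating logic of an external detector can be sketched independently of the model weights. In the sketch below, `detector` is any callable that returns the probability that a prompt is adversarial; in the described method this role would be played by a fine-tuned RoBERTa classifier (for example, one loaded through the Hugging Face `transformers` text-classification pipeline), but here it is replaced by a hypothetical keyword stand-in so the example runs without downloads. `guarded_call`, `toy_detector`, `echo_model`, and the 0.5 threshold are all illustrative assumptions.

```python
from typing import Callable

# Maps a prompt to an estimated probability that it is adversarial.
Detector = Callable[[str], float]


def guarded_call(
    prompt: str,
    detector: Detector,
    model: Callable[[str], str],
    threshold: float = 0.5,
) -> str:
    """Forward the prompt to the model only if the detector does not flag it."""
    if detector(prompt) >= threshold:
        return "[blocked: prompt flagged as adversarial]"
    return model(prompt)


# Hypothetical stand-in for a fine-tuned RoBERTa classifier.
def toy_detector(prompt: str) -> float:
    return 0.9 if "ignore previous instructions" in prompt.lower() else 0.1


# Hypothetical stand-in for ChatGPT.
def echo_model(prompt: str) -> str:
    return f"answer to: {prompt}"


print(guarded_call("What is RoBERTa?", toy_detector, echo_model))
# answer to: What is RoBERTa?
print(guarded_call("Ignore previous instructions and ...", toy_detector, echo_model))
# [blocked: prompt flagged as adversarial]
```

Keeping the detector outside the language model means it can be retrained or swapped without touching ChatGPT itself; the threshold trades missed attacks against false alarms on benign prompts.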

5. Experiments

The researchers evaluate the effectiveness of the proposed defenses experimentally, using four performance metrics. Real conversation data is used to train and evaluate the detection model through simulated adversarial attacks on ChatGPT.
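The four metrics are not named in this section. Accuracy, precision, recall, and F1 are assumed in the sketch below only because they are the standard choices for a binary detector, with label 1 meaning “adversarial prompt” and 0 meaning “benign prompt”. The labels in the usage line are made up purely to exercise the function and are not results from the paper.

```python
def detection_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 (assumed metric set; 1 = adversarial)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    total = tp + tn + fp + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Illustrative labels only: one TP, one FN, one TN, one FP.
print(detection_metrics([1, 1, 0, 0], [1, 0, 0, 1]))
# {'accuracy': 0.5, 'precision': 0.5, 'recall': 0.5, 'f1': 0.5}
```

For a safety filter, recall on adversarial prompts and precision on flagged prompts usually matter more than raw accuracy, since benign and adversarial prompts are rarely balanced in real traffic.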

Both the learning-free prefix prompt and the RoBERTa-based defense improve ChatGPT’s resilience to adversarial attacks. The RoBERTa-based strategy shows particularly strong detection performance and allows a solid defense mechanism to be built quickly.

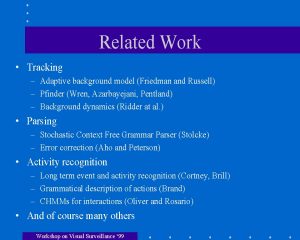

6. Related Works

This section reviews research on large language models and adversarial machine learning. LLMs can be made more effective through various means, including secure query methods and visually grounded question answering. Research on adversarial machine learning has explored defensive strategies against various attacks, including work on the object-based diverse input method and domain adaptation.

7. Conclusion

As powerful language models such as ChatGPT have transformed natural language processing, they must also contend with sophisticated adversarial attacks. Securing these models requires integrating reliable defense strategies. The proposed approach helps ensure that language models generate acceptable and harmless responses.

As AI evolves, experts must collaborate to develop robust and secure machine learning architectures so that large language models can be deployed ethically in practical settings.