Introduction:

Deep neural networks have driven remarkable progress across many applications. However, training and deploying them often requires substantial computation and energy, raising concerns about their carbon footprint and potential e-waste. In response to these sustainability and efficiency challenges, researchers are exploring techniques to reduce the size and complexity of neural networks.

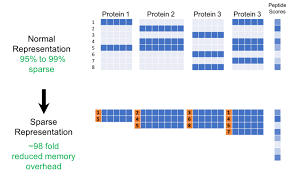

Sparsity is a promising approach that explicitly or implicitly reduces the number of non-zero parameters in a neural network. It can substantially decrease computational and storage requirements, often with little or no loss in accuracy, and in some cases it even improves generalization. This paper examines the current state of sparsity-inducing techniques and their applications across machine learning domains.
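
To make this definition concrete, here is a minimal sketch, assuming NumPy, that measures sparsity as the fraction of exactly-zero entries and estimates how many bytes a compressed sparse row (CSR) layout would need for the same matrix. The helper names `sparsity` and `csr_nbytes`, the 512×512 size, and the 90% zero rate are illustrative choices, not taken from any library or experiment.

```python
import numpy as np


def sparsity(weights: np.ndarray) -> float:
    """Fraction of entries that are exactly zero."""
    return 1.0 - np.count_nonzero(weights) / weights.size


def csr_nbytes(weights: np.ndarray, index_dtype=np.int32) -> int:
    """Approximate bytes for a 2-D matrix in CSR form: the non-zero values,
    one column index per non-zero, and (rows + 1) row pointers."""
    nnz = np.count_nonzero(weights)
    index_size = np.dtype(index_dtype).itemsize
    return nnz * weights.itemsize + nnz * index_size + (weights.shape[0] + 1) * index_size


rng = np.random.default_rng(0)
w = rng.standard_normal((512, 512)).astype(np.float32)
w[rng.random(w.shape) < 0.9] = 0.0  # zero out roughly 90% of the entries

print(f"sparsity: {sparsity(w):.2%}")
print(f"dense: {w.nbytes} bytes, CSR: {csr_nbytes(w)} bytes")
```

With float32 values and int32 indices, each stored non-zero costs 8 bytes instead of 4, so this layout only saves memory once more than about half of the entries are zero. Compute savings likewise depend on kernels or hardware that can actually skip the zeros.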

Background:

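Model size can be reduced in several related ways, including pruning, quantization, and low-rank decomposition. Of these, pruning introduces sparsity directly: it removes individual parameters or connections judged unimportant, for example those with the smallest magnitudes. Quantization reduces the numerical precision of the parameters (for instance, from 32-bit floats to 8-bit integers), and low-rank decomposition factorizes a weight matrix into a product of smaller matrices with fewer parameters in total.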
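
The three techniques can be sketched on a single random weight matrix, assuming NumPy. The helpers (`magnitude_prune`, `quantize_int8`, `low_rank`) are hypothetical names; they use plain magnitude-based pruning, symmetric per-tensor 8-bit quantization, and a truncated SVD, which are common textbook variants rather than the only options. Only the pruned matrix contains zeros: quantization and low-rank factorization shrink the model without making it sparse.

```python
import numpy as np


def magnitude_prune(w: np.ndarray, sparsity: float) -> np.ndarray:
    """Unstructured pruning: zero the `sparsity` fraction of smallest-|w| entries."""
    k = int(sparsity * w.size)
    pruned = w.copy()
    smallest = np.argsort(np.abs(w), axis=None)[:k]  # flat indices of the k smallest |w|
    pruned.flat[smallest] = 0
    return pruned


def quantize_int8(w: np.ndarray):
    """Symmetric uniform quantization to int8 with a single per-tensor scale."""
    max_abs = float(np.abs(w).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale


def low_rank(w: np.ndarray, rank: int):
    """Truncated SVD: w is approximated by a @ b, a: (m, rank), b: (rank, n)."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    return u[:, :rank] * s[:rank], vt[:rank, :]


rng = np.random.default_rng(0)
w = rng.standard_normal((256, 128)).astype(np.float32)

pruned = magnitude_prune(w, 0.8)
q, scale = quantize_int8(w)
a, b = low_rank(w, rank=16)

print("non-zeros after pruning:", np.count_nonzero(pruned), "of", w.size)
print("int8 max abs error:", np.abs(dequantize(q, scale) - w).max(), "bound:", scale / 2)
print("low-rank parameters:", a.size + b.size, "vs", w.size)
```

A rank-16 factorization stores 16 × (256 + 128) = 6,144 numbers instead of 32,768, but how well it approximates the original depends on how quickly the singular values decay. This random matrix has no low-rank structure, so here the approximation is poor.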

Sparsity Algorithms:

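- Structured Pruning: Removing entire neurons, channels, or layers, so that the remaining weights form smaller dense matrices that run efficiently on standard hardware.

- Sparse Initialization: Initializing network weights so that most entries are zero from the start.

- Column Sampling: Selecting a subset of columns from weight matrices, which effectively reduces the number of input features.

- Binary Weights: Restricting weights to two values (for example, +1 and -1), so each parameter needs only a single bit; this reduces storage rather than the number of parameters.

- Low-Precision Weights: Using lower-precision weights to reduce the number of bits required to store each parameter.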

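
Each item in the list above can be illustrated with a few lines of NumPy. This is a minimal sketch under simple assumptions: weights are stored as an `(out_features, in_features)` matrix used as `y = w @ x`, binarization uses the sign of each weight with a single mean-magnitude scale (similar to the scaling used in XNOR-Net), and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def prune_neurons(w: np.ndarray, keep: int):
    """Structured pruning: keep the `keep` output neurons (rows of w, with
    y = w @ x) that have the largest L2 norm; drop the other rows entirely."""
    norms = np.linalg.norm(w, axis=1)
    kept = np.sort(np.argsort(norms)[-keep:])
    return w[kept], kept


def sparse_init(shape, density: float) -> np.ndarray:
    """Sparse initialization: scaled Gaussian weights with a random mask that
    keeps only about a `density` fraction of entries non-zero."""
    w = rng.standard_normal(shape) / np.sqrt(shape[1])
    mask = rng.random(shape) < density
    return (w * mask).astype(np.float32)


def sample_columns(w: np.ndarray, n_cols: int):
    """Column sampling: keep a random subset of columns (input features)."""
    cols = np.sort(rng.choice(w.shape[1], size=n_cols, replace=False))
    return w[:, cols], cols


def binarize(w: np.ndarray):
    """Binary weights: sign(w) in {-1, +1} plus one shared scale (mean |w|).
    Signs are kept as float32 here for simplicity; a deployment would pack
    them into 1 bit each."""
    return np.where(w >= 0, 1.0, -1.0).astype(np.float32), float(np.abs(w).mean())


def to_half(w: np.ndarray) -> np.ndarray:
    """Low-precision weights: float16 storage, 2 bytes per weight."""
    return w.astype(np.float16)


w_sparse = sparse_init((64, 32), density=0.25)
print("sparsity at init:", 1 - np.count_nonzero(w_sparse) / w_sparse.size)

w = rng.standard_normal((64, 32)).astype(np.float32)
w_small, kept = prune_neurons(w, keep=16)     # shape (16, 32)
w_sub, cols = sample_columns(w, n_cols=8)     # shape (64, 8)
signs, alpha = binarize(w)                    # w is approximated by alpha * signs
print(w_small.shape, w_sub.shape, to_half(w).nbytes, "bytes vs", w.nbytes)
```

In a full network, removing a neuron (row) from one layer also means removing the matching input column from the next layer. That is what keeps structured pruning hardware-friendly: the result is simply a smaller dense matrix.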

Image by: http://tagkopouloslab.ucdavis.edu/?p=2147

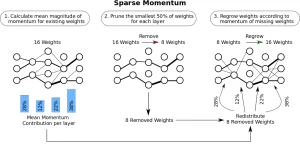

Sparse Training Algorithms:

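Sparse training algorithms induce sparsity during the training process. Two common families are penalty-based methods and gradient-based pruning methods. Penalty-based methods add a regularization term to the loss: weight decay (an L2 penalty) pushes weights toward small values, while an L1 penalty drives many of them to exactly zero. Gradient-based pruning scores each weight using gradient information, for example the magnitude of the weight multiplied by its gradient, and removes the lowest-scoring weights.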
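
The difference between these approaches can be seen on a toy linear-regression problem. The sketch below, assuming NumPy, trains once with weight decay (an L2 penalty) and once with an L1 penalty applied through proximal gradient steps (ISTA), then scores a random initialization by |w · ∂L/∂w|, a saliency criterion in the spirit of SNIP. The data, learning rate, penalty strength, and function names are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy linear-regression data in which only the first 5 of 50 features matter.
n, d = 200, 50
x = rng.standard_normal((n, d))
true_w = np.zeros(d)
true_w[:5] = 3.0 * rng.standard_normal(5)
y = x @ true_w + 0.1 * rng.standard_normal(n)


def loss_grad(w: np.ndarray) -> np.ndarray:
    """Gradient of the loss L(w) = 0.5 * mean((x @ w - y) ** 2)."""
    return x.T @ (x @ w - y) / n


def train_weight_decay(lam: float, lr: float = 0.1, steps: int = 500) -> np.ndarray:
    """Gradient descent on L(w) + 0.5 * lam * ||w||_2^2 (weight decay)."""
    w = np.zeros(d)
    for _ in range(steps):
        w -= lr * (loss_grad(w) + lam * w)
    return w


def soft_threshold(w: np.ndarray, t: float) -> np.ndarray:
    """Proximal step for t * ||w||_1: shrink every weight by t, clipping at 0."""
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)


def train_l1(lam: float, lr: float = 0.1, steps: int = 500) -> np.ndarray:
    """Proximal gradient descent (ISTA) on L(w) + lam * ||w||_1."""
    w = np.zeros(d)
    for _ in range(steps):
        w = soft_threshold(w - lr * loss_grad(w), lr * lam)
    return w


def saliency_prune(w: np.ndarray, keep: int) -> np.ndarray:
    """Gradient-based pruning: score weights by |w * dL/dw| and keep the top `keep`."""
    scores = np.abs(w * loss_grad(w))
    mask = np.zeros(d)
    mask[np.argsort(scores)[-keep:]] = 1.0
    return w * mask


w_wd = train_weight_decay(lam=0.1)
w_l1 = train_l1(lam=0.1)
w0 = 0.1 * rng.standard_normal(d)          # random initialization
w_pruned = saliency_prune(w0, keep=10)     # prune at initialization; training the survivors is omitted

print("non-zeros with weight decay:", np.count_nonzero(w_wd))
print("non-zeros with L1 penalty:", np.count_nonzero(w_l1))
print("non-zeros after saliency pruning:", np.count_nonzero(w_pruned))
```

Weight decay leaves every coefficient non-zero (just small), whereas the soft-thresholding step sets most irrelevant coefficients exactly to zero. That difference is why L1-style penalties, rather than plain weight decay, are the usual choice when the goal is sparsity.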

Future Research Directions:

The choice of regularization coefficients or pruning thresholds significantly impacts sparsity and performance. More robust and automated methods for selecting hyperparameters are needed. Future research could focus on developing novel sparse training algorithms with additional constraints or inductive biases, such as structural or functional sparsity.
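
The sensitivity to pruning thresholds is easy to see on a single layer. This sketch, assuming NumPy and a random 256×256 layer evaluated on a random batch, sweeps a hand-picked magnitude threshold and reports the resulting sparsity and the relative change in the layer's output; it then derives the threshold from a target sparsity via a quantile. The names and numbers are hypothetical, and the quantile trick only replaces a scale-dependent knob with a scale-free one; it does not choose the sparsity level automatically.

```python
import numpy as np

rng = np.random.default_rng(0)
w = rng.standard_normal((256, 256)).astype(np.float32)
x = rng.standard_normal((256, 64)).astype(np.float32)  # a batch of 64 inputs
y_ref = w @ x


def prune_at(w: np.ndarray, threshold: float) -> np.ndarray:
    """Magnitude pruning with a hand-picked threshold."""
    return np.where(np.abs(w) > threshold, w, 0).astype(w.dtype)


def report(w_pruned: np.ndarray):
    """Return (sparsity, relative change in the layer's output)."""
    sparsity = 1 - np.count_nonzero(w_pruned) / w_pruned.size
    error = np.linalg.norm(w_pruned @ x - y_ref) / np.linalg.norm(y_ref)
    return sparsity, float(error)


# 1) Sweep: sparsity and output error both change with the threshold.
sweep = [(t, *report(prune_at(w, t))) for t in (0.25, 0.5, 1.0, 1.5, 2.0)]
for t, sp, err in sweep:
    print(f"threshold={t:.2f}  sparsity={sp:.2f}  output error={err:.3f}")


# 2) Derive the threshold from a target sparsity instead of the weight scale.
def threshold_for(w: np.ndarray, target_sparsity: float) -> float:
    return float(np.quantile(np.abs(w), target_sparsity))


sp, err = report(prune_at(w, threshold_for(w, 0.5)))
print(f"target 50% -> sparsity={sp:.2f}  output error={err:.3f}")
```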

Conclusion:

Sparsity techniques hold considerable promise for making machine learning more sustainable and efficient. Because sparse models demand less computation and storage, they allow researchers to build AI models that are both capable and more environmentally friendly. Collaboration between academia and industry can further integrate sparsity into machine learning pipelines and promote environmentally responsible AI development.