Introduction:

In today's virtual learning environments, ChatGPT has become a widely used AI language model and a valuable tool for both students and educators. Its use has also raised concerns about privacy and data security, which calls for privacy-preserving techniques that keep interactions secure. This article looks at how to safeguard your data when using ChatGPT in online learning settings.

Understanding ChatGPT in Virtual Learning Environments:

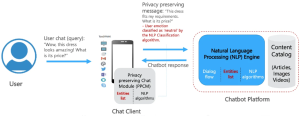

ChatGPT is a large language model designed to provide real-time assistance and information in conversation. Integrating it into virtual classrooms can make learning more interactive and engaging. However, it is important to understand the privacy implications that come with this technology.

The Significance of Privacy in Virtual Learning:

In virtual learning environments, personal information and sensitive data are often shared with ChatGPT. Safeguarding this information is essential to maintaining confidentiality and preventing misuse. Students, parents, and educators should be able to trust that their data remains protected throughout the learning journey.

Privacy-Preserving Techniques for ChatGPT:

Strong Data Encryption and Storage:

Encrypting data in transit and at rest protects what is shared with ChatGPT from unauthorized access. Secure storage practices, such as access controls and limited retention periods, further reduce the risk that stored data is compromised.

Federated Learning for Enhanced Privacy:

Federated learning is a technique that would let a model such as ChatGPT learn from many sources without directly accessing individual data. Each device trains on its own data and shares only model updates, so the raw data stays decentralized and never leaves the user’s personal device.
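To make the idea concrete, here is a minimal sketch of federated averaging on a toy linear model. It is an illustration only and not how ChatGPT is actually trained; the function names (`local_update`, `federated_average`, `train`) and the toy data are assumptions made for this example.

```python
from typing import List, Sequence, Tuple

Weights = Tuple[float, float]        # (slope w, intercept b) of y = w*x + b
Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one client


def local_update(weights: Weights, data: Dataset, lr: float = 0.1) -> Weights:
    """Run one pass of gradient descent on a single client's private data."""
    w, b = weights
    for x, y in data:
        err = (w * x + b) - y
        w -= lr * err * x
        b -= lr * err
    return (w, b)


def federated_average(updates: Sequence[Weights], sizes: Sequence[int]) -> Weights:
    """Combine client weights, weighting each client by its dataset size."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train(clients: Sequence[Dataset], rounds: int = 300) -> Weights:
    """Hypothetical server loop: only weights travel, never the raw (x, y) pairs."""
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


if __name__ == "__main__":
    # Two clients whose private data both follow y = 2x + 1.
    clients = [[(0.0, 1.0), (1.0, 3.0)], [(2.0, 5.0), (3.0, 7.0)]]
    print(train(clients))
```

Note that the server in this sketch only ever sees each client's updated weights. In practice, model updates can still leak information, which is one reason federated learning is often combined with other protections.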

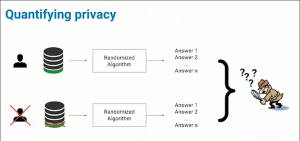

Differential Privacy Measures:

Differential privacy adds carefully calibrated random noise to the data or computations behind a model such as ChatGPT, making it difficult for the model to memorize specific user inputs. This technique protects individual identities while still allowing accurate and useful responses, although more noise generally means less accuracy.
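As a small illustration, the sketch below applies the Laplace mechanism, one standard way to achieve differential privacy, to a simple count over student records. It shows the principle on an aggregate statistic rather than on language-model training; the names `laplace_noise` and `private_count` and the example data are assumptions made for this example.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    values: Iterable[int],
    predicate: Callable[[int], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Return a noisy count of the values that satisfy the predicate.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    ages = [15, 16, 17, 18, 19, 20]  # made-up example data
    print(private_count(ages, lambda age: age >= 18, epsilon=1.0))
```

A smaller `epsilon` gives stronger privacy but a noisier answer, so the value is a policy choice rather than a fixed constant.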

User Anonymization and Identity Protection:

To strengthen privacy further, a ChatGPT integration can be designed to strip user identifiers after each interaction. This helps ensure that the model does not retain personal information that could be traced back to specific users.
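One way a learning platform might approach this is to remove obvious identifiers before a message is sent and to replace real user IDs with keyed pseudonyms. The sketch below is a simplified assumption of such a step: the helper names (`redact`, `pseudonymize`) are hypothetical, and the regular expressions catch only common email and phone formats, not every kind of personal data.

```python
import hashlib
import hmac
import re

# Simplified patterns for illustration; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Derive a stable pseudonym from a user ID with a keyed hash (HMAC-SHA256).

    Without the secret key, the pseudonym cannot feasibly be linked back to the ID.
    """
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


if __name__ == "__main__":
    message = "Email me at jane.doe@example.edu or call 555-123-4567."
    print(redact(message))
    print(pseudonymize("student-42", b"keep-this-key-secret"))
```

Keeping the secret key on the platform side, separate from the chat logs, means the logs alone do not reveal which student wrote which message.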

Striking a Balance between Privacy and Model Performance:

While privacy is paramount, it is also important to preserve ChatGPT’s performance and usefulness. Stronger privacy measures can reduce accuracy, so finding the right balance between privacy and the model’s capabilities supports a good learning experience without compromising data security.
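As a rough illustration of this trade-off, assume the Laplace mechanism from differential privacy, in which a result with sensitivity $\Delta$ is released with added noise $\eta$ of scale $b$, and $\varepsilon$ is the privacy budget (smaller means stronger privacy). The symbols are illustrative and are not taken from any ChatGPT documentation.

$$
\eta \sim \mathrm{Laplace}(0, b), \qquad b = \frac{\Delta}{\varepsilon}, \qquad \mathbb{E}\,|\eta| = b = \frac{\Delta}{\varepsilon}
$$

Halving $\varepsilon$ therefore doubles the expected error: with $\Delta = 1$, a budget of $\varepsilon = 1$ gives an expected absolute error of 1, while $\varepsilon = 0.5$ gives 2.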

Conclusion:

Incorporating privacy-preserving techniques into virtual learning environments is essential for using ChatGPT securely. By implementing secure data practices, adopting federated learning, employing differential privacy measures, and anonymizing user data, we can safeguard sensitive information and foster a secure and enriching learning experience for all users.